july 2023

signal collection hub preference study

Company: Large image-sharing social media platform

Timeframe: 6 weeks

Role: Lead researcher

Stakeholders: Product team (UX Designers, UX Researchers, UX Management)

Methods: Usability Testing, Preference Testing, Moderated Interviews

Tools: UserTesting, Google Sheets, Miro, Figma

OVERVIEW

PROJECT DESCRIPTION

A large social media company developed the Signal Collection Hub (SCH) to create a two-way dialogue between the platform and its users, giving users greater control and transparency.

UserTesting partnered with the social media company to identify the strengths and drawbacks of each new design compared to the current in-product design (control). The study mainly focused on less-engaged users to determine what would help increase their engagement and enjoyment with the platform, ultimately improving relevancy and retention.

PROBLEM

In past research studies, the company identified a lack of balance between transparency, control, and self-discovery within their platform. As a result, the team created two potential designs for the updated Signal Collection Hub (“Activity Summary” and “Guided Utility”) in an attempt to improve this balance.

APPROACH

RESEARCH OBJECTIVES

The company aimed to answer the following research objectives:

What are the strengths and drawbacks of each of the new SCH designs?

How effective, if at all, are the updated designs at communicating the downstream impact of a user’s activity?

How do users feel about the level of control available within the SCH experience?

How might the updated SCH designs influence less-engaged users’ likelihood to engage with the platform?

PROCESS

user interviews

Participant Criteria

I screened participants through UserTesting.com using the following criteria:

At least 20% male and 30% BIPOC to ensure diverse feedback

United States only

I also divided participants into two audiences (“Core” or “Less Engaged”) to understand how preferences and experiences varied based on level of engagement with the platform. Since one of the research objectives was to understand how to influence less-engaged users to interact with the platform, participants were more heavily weighted toward that audience:

Group 1: Core (n = 5)

Visits the platform at least once a month

Most recently visited the platform within the last month

Engages with and/or curates their homefeed

Group 2: Less Engaged (n = 10)

Visits the platform at most once a week

Most recently visited the platform between 1 and 6 months ago

Does not frequently engage with their homefeed and/or perceives it negatively

Test details

Given the depth of the research objectives and nuanced prototype requirements, I opted to conduct a remote, moderated usability study (60-minute in-depth interviews) with 16 participants from the UserTesting Contributor Network.

In the sessions, each participant engaged with two of the three SCH designs (the two new designs and the control), with presentation order counterbalanced across participants to mitigate order bias. To ensure a personalized experience, each participant’s prototype was tailored to their interests before their scheduled session.

Sessions encompassed the following elements:

Prototype Review: Participants reviewed two of the three SCH prototypes and shared their preferences, likes, and dislikes for each design.

Navigation Exercise: Participants completed a navigation exercise and discussed how they preferred to move between the prototype tabs, revealing their behaviors and preferences around navigation elements.

Application and Privacy Discussion: Participants discussed application and privacy to surface their sentiment about the level of control available. This segment included a ranking exercise in which participants evaluated three potential feature surfaces, revealing their priorities and concerns.

Excerpts from Moderated Discussion Guide

ANALYSIS

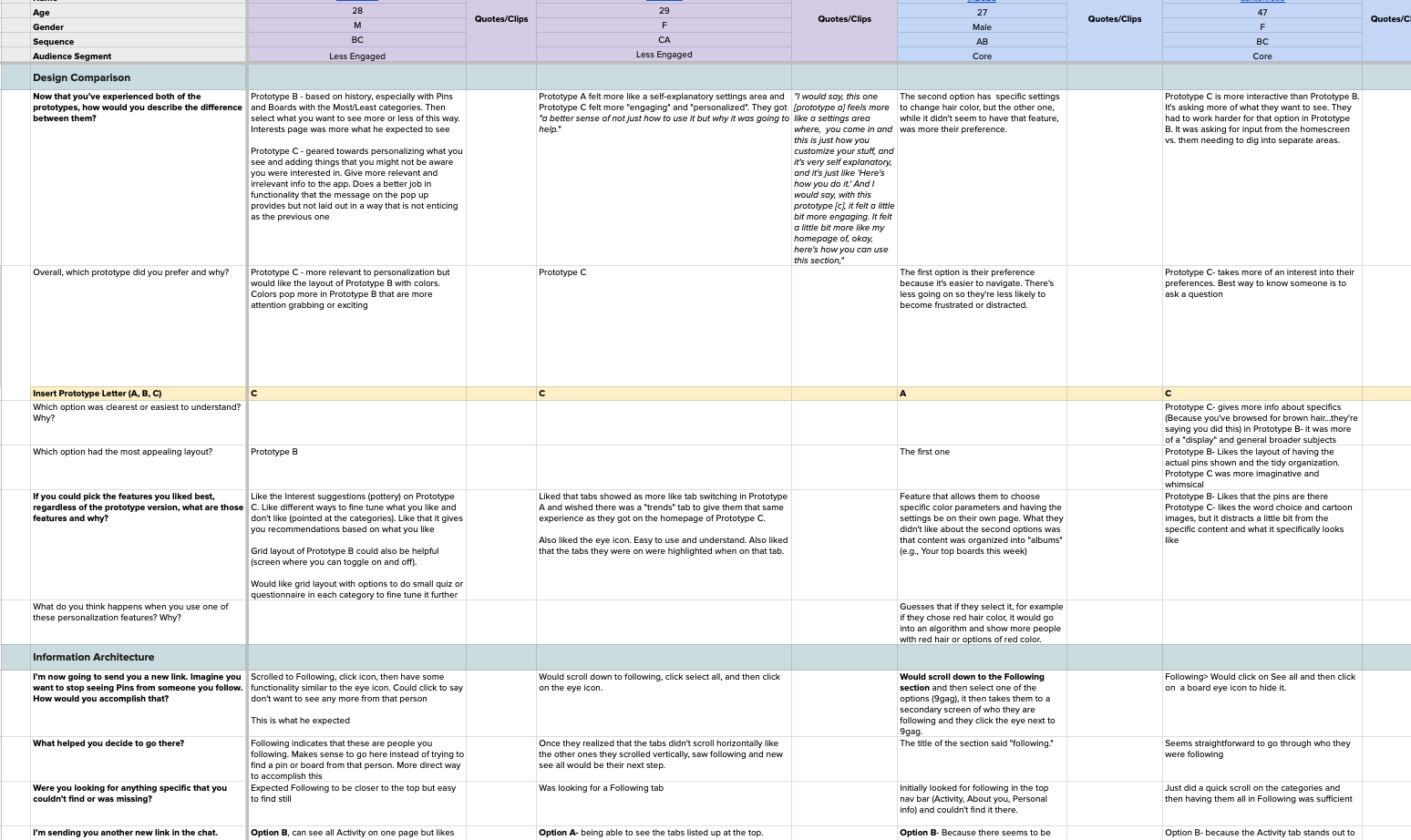

Example of annotations and preliminary analysis within Google Sheets

Using Google Sheets and Miro, I worked with a supporting researcher to analyze preferences and build an affinity map that surfaced overarching themes.

We categorized the strengths and weaknesses of each design and explored the underlying mental models driving these preferences.

Example of quotes and clips incorporated into deliverable slides

Additionally, we extracted key quotes and curated highlight reels to showcase prominent themes within the final deliverable.

outcome

findings

Through this process, I found that participants’ design preferences were driven more by their personalization goals than by their level of engagement.

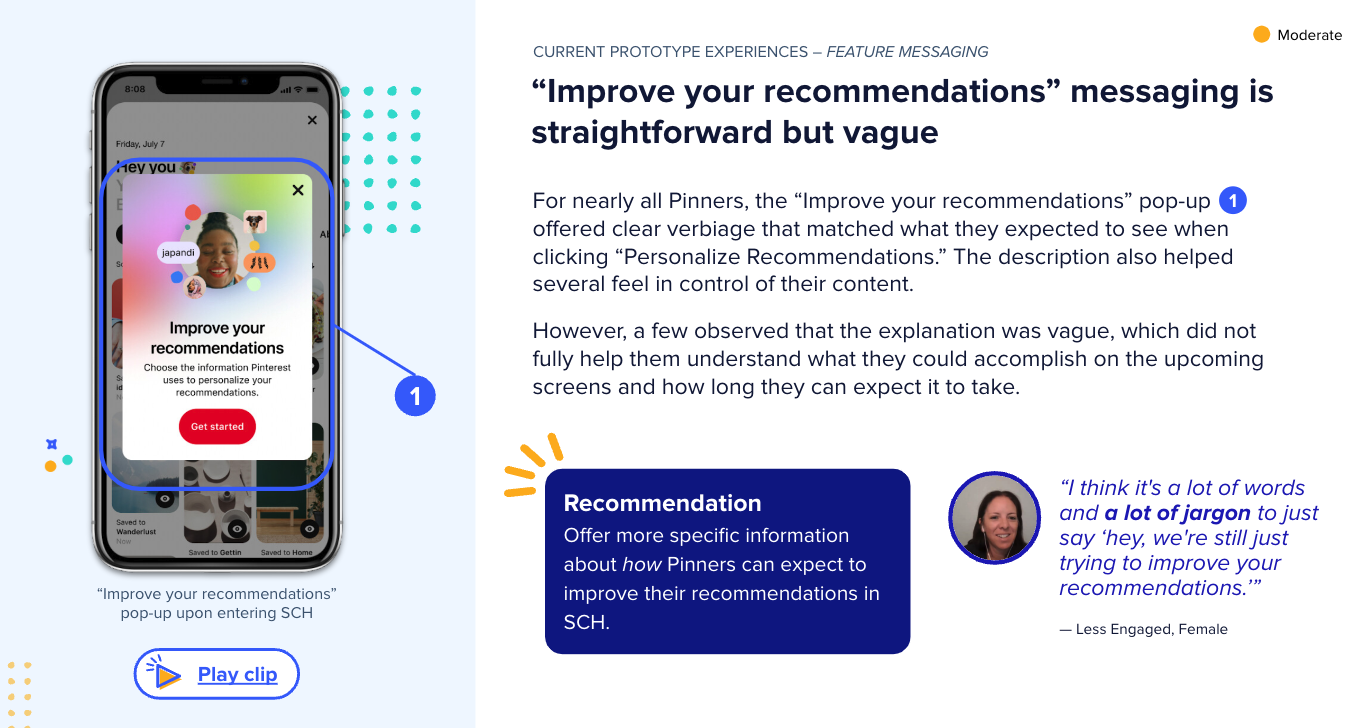

The analysis also validated that the “Control” design supported quick preference adjustments, closely matching user expectations. However, elements such as the engaging quizzes in the new “Guided Utility” design showed potential to encourage users to discover new ideas and interact more with the platform as a whole.

Because our stakeholders held diverse roles, I presented findings in multiple formats, including a summary of key findings for the UX management team and a breakdown of design strengths and weaknesses for designers.

Deliverable slide comparing the three designs

IMPACT

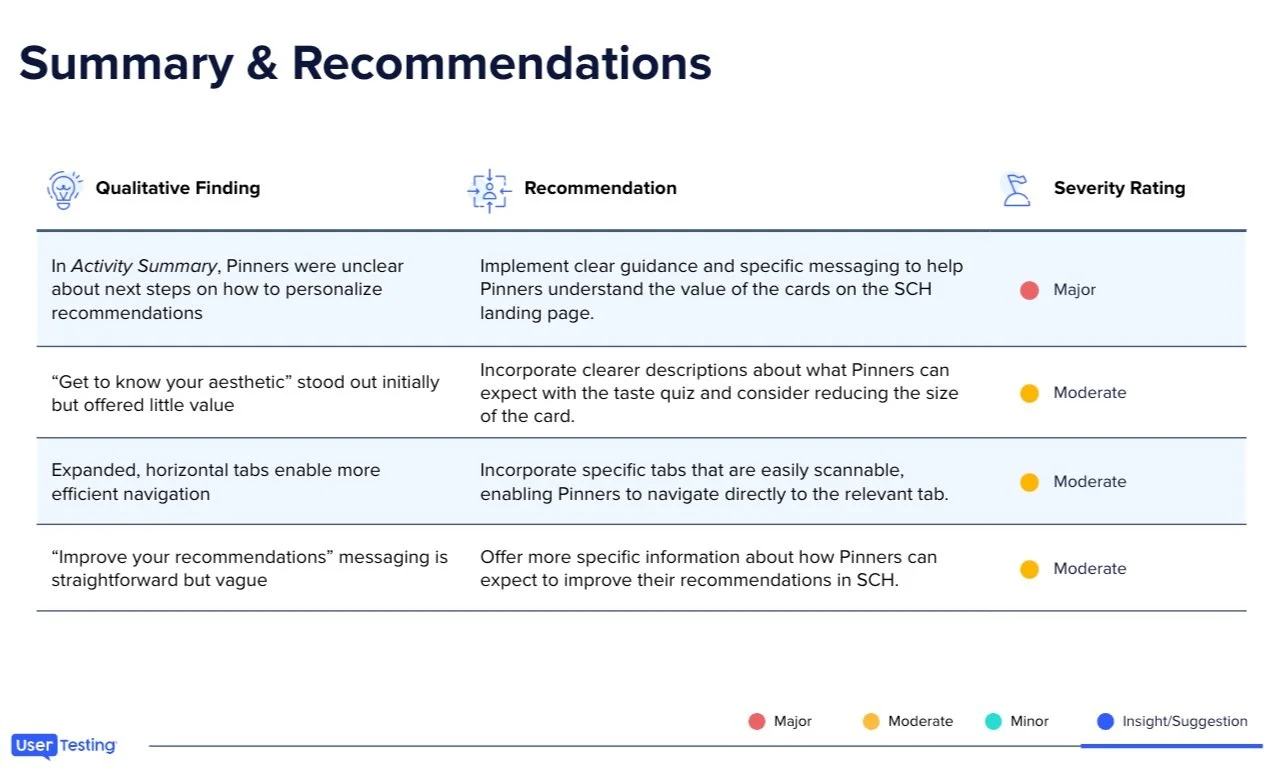

The analysis identified key opportunities, helping the product team prioritize the essential elements of a minimum viable product (MVP).

The deck was also shared with the company’s senior management to make the case for funding long-term feature goals that would add value and increase overall platform engagement.

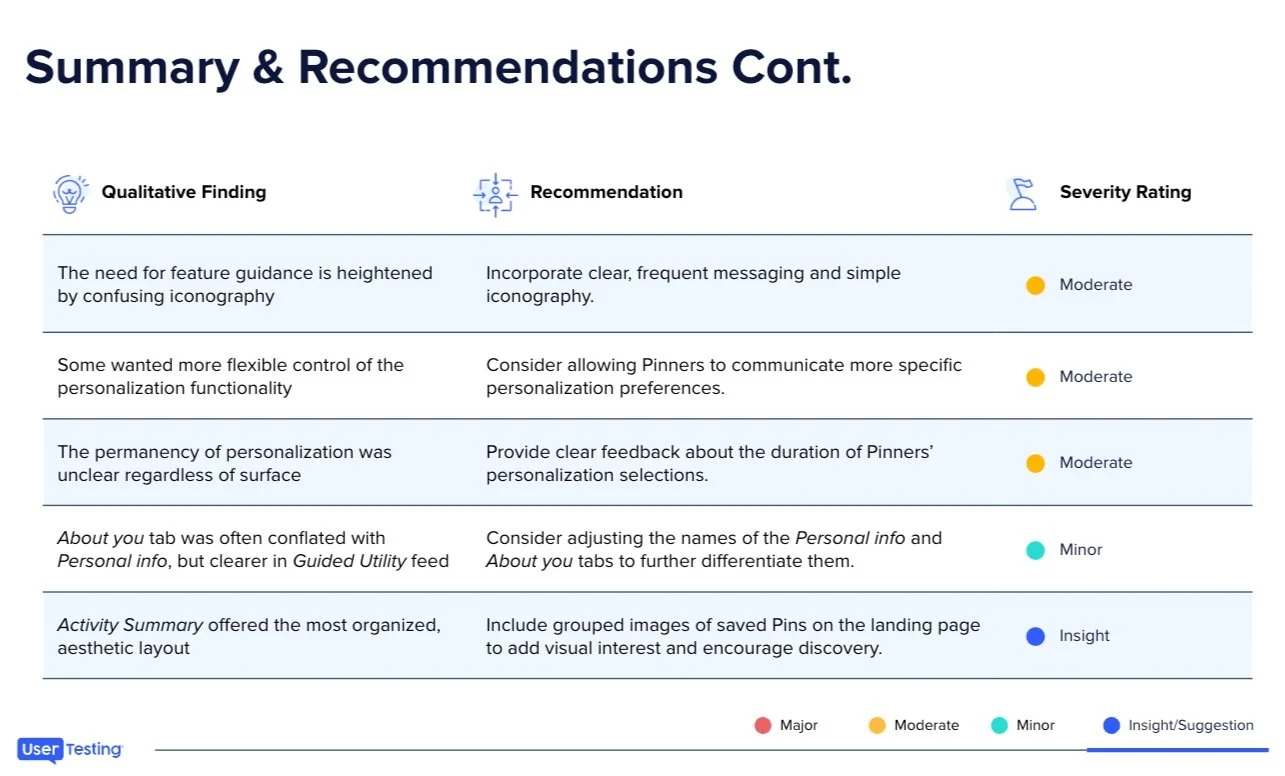

Summary of insights and associated recommendations ranked by severity

reflection

What I’d Change:

Narrow the research scope to either analyzing user behaviors or testing feature preferences, to achieve more in-depth insights

Set clear expectations with stakeholders about their involvement during sessions so the moderator can stay focused

What Went Well:

Validated successful elements of the current in-product design, helping the team prioritize efforts more efficiently by avoiding unnecessary design changes

Pinpointed easily addressable issues that could quickly enhance the product’s performance

Thoroughly assessed the successes and shortcomings of the new designs based on user behavior, offering insights to guide the product’s long-term development